Measuring Social Impact While Streamlining Operations

Schedule a Demo View Interactive Tour A Deep Dive into How Brands and Foundations Can Power Purpose-Driven, Data-Backed Scholarship and Grant Programs

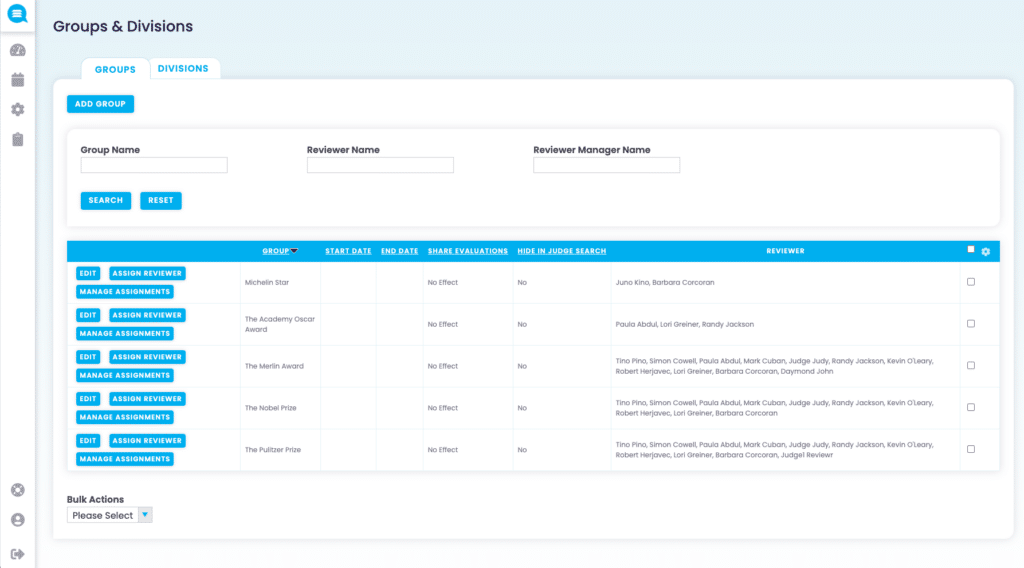

Assigning applicants to review teams

You’ve launched your website and collected online applications, and now it’s time to review them. Easy, right? Not so fast. How do you create a review workflow that is efficient for your review committee and also fair, equitable, compliant, and engaging? How do you ensure that the review committee has all the content they need without overwhelming them? Let’s dive into the most common workflows for assigning applications to review teams.

Blinding Data

Blinding applicant data from review teams has become increasingly popular with Reviewr customers. Oftentimes this is done for two reasons.

What data can be blinded?

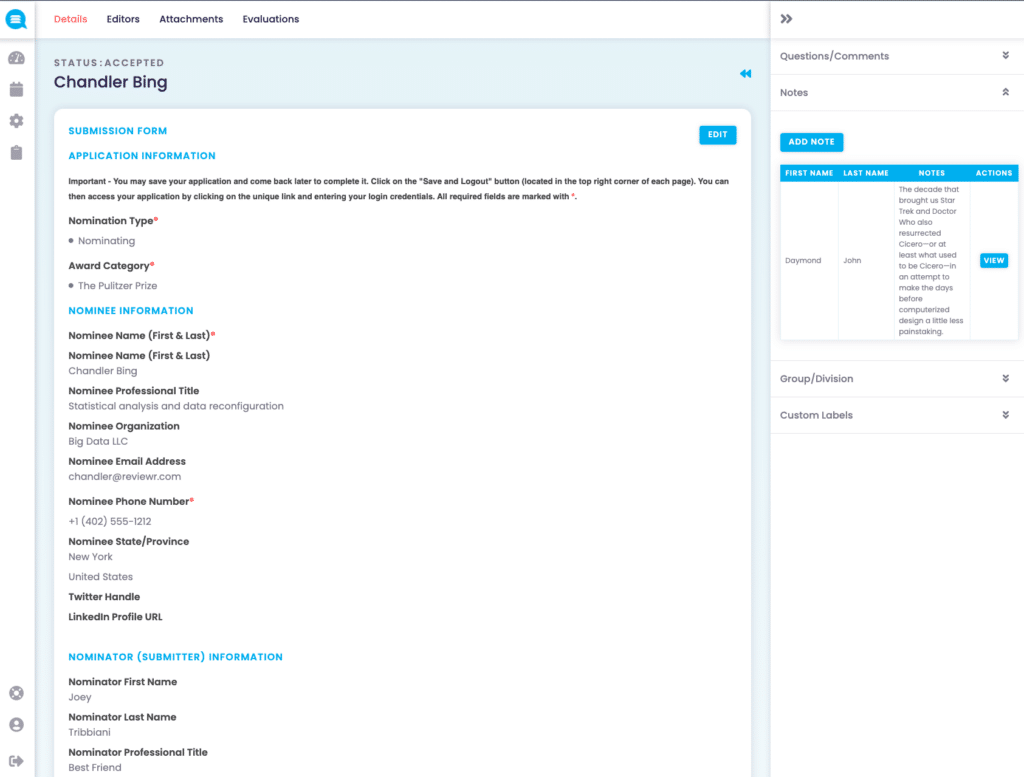

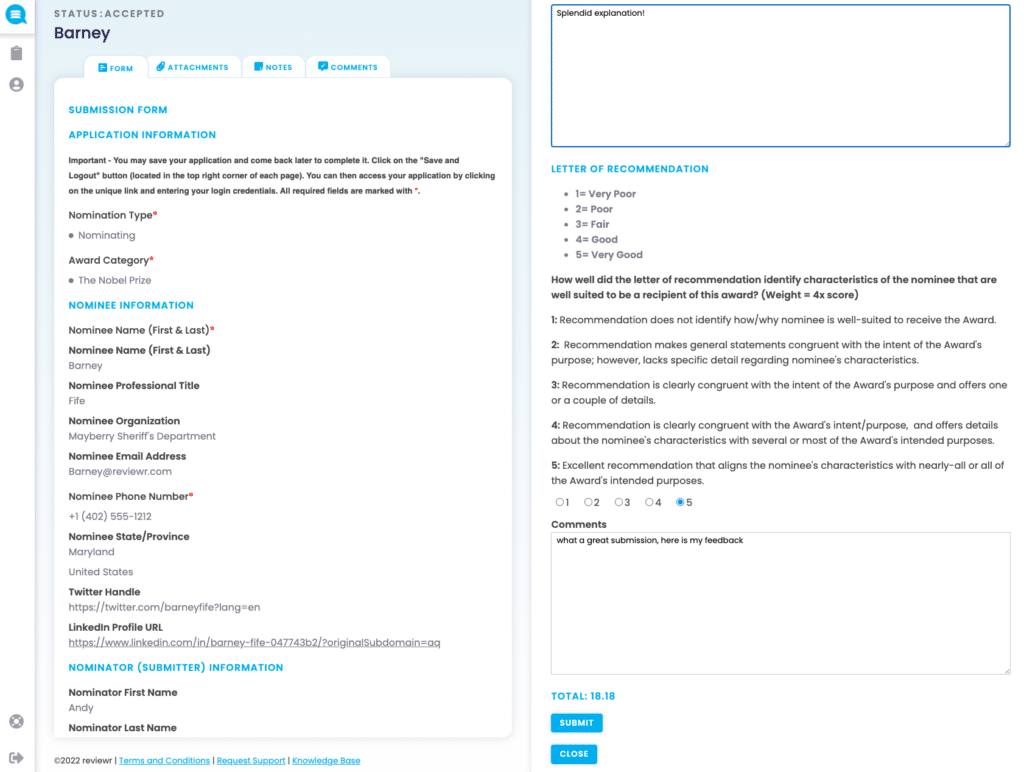

Granting access and conducting reviews

Submissions have been assigned to review teams. Check. Data has been blinded. Check. Now it’s time to begin reviewing.

First, let’s ensure that the review team has been added and assigned.

An online review process is critical to an efficient and compliant workflow. Tools like Reviewr not only streamline operations but also eliminate a large share of the human error involved in tabulating results and collecting evaluations.
